Bulk dataset/Downloads

In this type of computational access, an organization makes its material available as a data download. The organization creates a dataset from its material (this may be all of its collections, or a subset of them), processes it, and then makes it possible for users to download it through an online interface or portal. These datasets are normally available in CSV (Comma-Separated Values) and JSON formats, as both are ubiquitous and easy for humans and computers to read. Read more about the CSV and JSON formats here: Our Friends CSV and JSON.
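To make the two formats concrete, the following is a minimal sketch of how a user might read the same records from a CSV file and from a JSON file using only the Python standard library. The sample records, field names, and function names are invented for illustration and do not come from any real collection.

```python
import csv
import io
import json

# Hypothetical sample data: two invented records in each format.
# A real bulk download would be read from a file on disk instead.
CSV_TEXT = "id,title,year\n1,Harbor at Dusk,1921\n2,Untitled Study,1954\n"
JSON_TEXT = """[
  {"id": "1", "title": "Harbor at Dusk", "year": "1921"},
  {"id": "2", "title": "Untitled Study", "year": "1954"}
]"""


def load_csv(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def load_json(text):
    """Parse JSON text into Python lists and dicts."""
    return json.loads(text)


csv_records = load_csv(CSV_TEXT)
json_records = load_json(JSON_TEXT)

# Both formats yield the same list of records once parsed.
print(csv_records == json_records)
for record in csv_records:
    print(record["id"], record["title"], record["year"])
```

Note that CSV carries no type information, so every value arrives as a string; the JSON sample stores its values as strings too, so that the two results can be compared directly.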

This type of access gives an organization a lot of control over the material, as it sets the parameters of what is being made available. However, this approach also requires a lot of maintenance, as the dataset will need to be updated and uploaded manually. Versioning is also something to take into consideration. For single files or downloads this may not be as problematic, but when working with large amounts of data this can be important, as results may differ from version to version, depending on what has changed and why. Even if providers cannot retain all versions of the data, users should be encouraged to correctly cite the version they have used to aid in potential reproducibility.
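One way a user could record which version of a dataset they worked with is to keep a small citation record next to the download. The sketch below assumes the user stores a version label, a retrieval date, and a SHA-256 checksum of the file. The function name `describe_download`, the file name, and the version labels are all hypothetical; a provider may publish its own versioning scheme, which should take precedence.

```python
import hashlib
import json
from datetime import date


def describe_download(data, source_name, version_label, retrieved):
    """Build a citation record for one downloaded dataset file.

    The checksum identifies the exact bytes that were used, even if
    the provider later replaces the file under the same name.
    """
    return {
        "source": source_name,
        "version": version_label,
        "retrieved": retrieved.isoformat(),
        "sha256": hashlib.sha256(data).hexdigest(),
    }


# Hypothetical contents of two releases of the same (invented) dataset.
release_1 = b"id,title\n1,Harbor at Dusk\n"
release_2 = b"id,title\n1,Harbor at Dusk\n2,Untitled Study\n"

record_1 = describe_download(
    release_1, "example-collection.csv", "2024-01", date(2024, 1, 15)
)
record_2 = describe_download(
    release_2, "example-collection.csv", "2024-02", date(2024, 2, 15)
)

print(json.dumps(record_1, indent=2))
# Different releases produce different checksums.
print(record_1["sha256"] != record_2["sha256"])
```

Keeping such a record alongside any analysis lets a later reader check whether they are working from the same bytes, even when the provider has not retained older versions.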

Once users have downloaded the file, they will have to set up their own environment and decide what they want to do with the material. Archivists may see this as the easiest way to make data computationally accessible, as it fits well with existing concepts of access and use. They are used to packaging and storing information for users to request and access, and the bulk datasets approach could be seen as a very similar process.

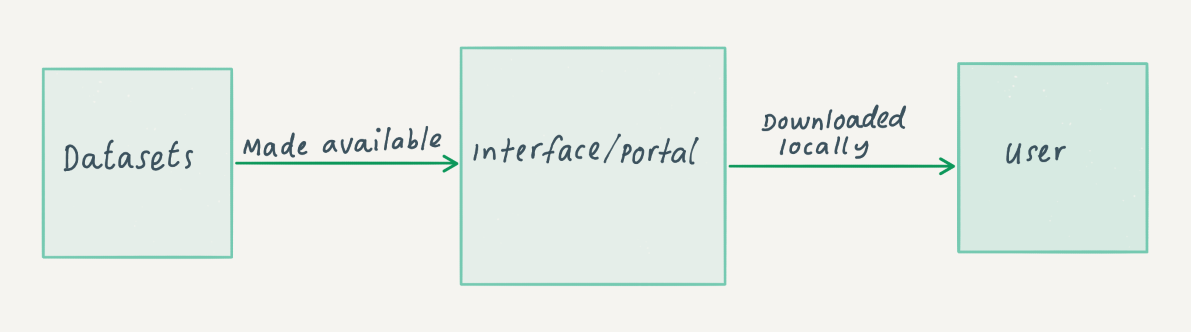

The diagram above illustrates the simplicity of the bulk datasets approach. An organization makes a dataset available through an interface or portal. A user can then download this dataset to work with.

There are different ways of providing access through bulk datasets. The type of material made available as datasets may differ; some organizations will only make their metadata available in bulk, whereas others include both the data and metadata. The hosting of the datasets does not necessarily have to be done by the organization itself. It may decide to upload this material to a third-party provider. For example, large datasets from the Museum of Modern Art (MoMA) in New York are hosted through GitHub; these files are automatically updated monthly and include a time-stamp for each dataset.

A similar approach is taken by Pittsburgh’s Carnegie Museum of Art (CMoA), which also has a GitHub repository; however, this one is updated less regularly.

OPenn is another repository for datasets, specifically archival images. It is managed by a cultural heritage institution which provides access to its own material as well as material from contributing institutions.

A slightly different approach was taken by the International Institute of Social History (IISH) in the Netherlands, which uses open-source software to make its datasets accessible. A slightly modified version of Dataverse is hosted on their website.

The table below shows a variety of ways in which the bulk dataset model has been applied at different organizations. It compares them on points including the following:

- Are data, metadata, or both made available?
- Is the data updated and versioned?
- Which file formats are available?
- Where is it hosted?

| Organization/Project | Data/Metadata | Versioning | Updated | Terms of use | Downloadable format | Type of Data | Hosted on |
| --- | --- | --- | --- | --- | --- | --- | --- |
|  | Both | Yes | Yes | Yes | Available through rsync | Unstructured book files | Own website |
|  | Metadata | Yes | Yes | Yes | Several formats through GitHub | Metadata of collection | GitHub |
|  | Both | Yes | Yes | Yes | Depends on the dataset | Structured research datasets | Own website with use of Dataverse |
|  | Both | No | No | Yes | CSV and JSON | Object from museum | GitHub |
|  | Both | No | Yes | Yes | CSV, TIFF and TEI | High Resolution archival images | Own website |